
Amazon typically asks interviewees to code in an online document. However, this can vary: it could be on a physical whiteboard or a virtual one. Check with your recruiter which it will be and practice it a lot. Now that you know what questions to expect, let's focus on how to prepare.

Below is our four-step prep plan for Amazon data scientist candidates. Before spending tens of hours preparing for an interview at Amazon, you should take some time to make sure it's actually the right company for you.

[…], which, although it's designed around software development, should give you an idea of what they're looking for.

Note that in the onsite rounds you'll likely have to code on a whiteboard without being able to execute it, so practice working through problems on paper. […] offers free courses on introductory and intermediate machine learning, as well as data cleaning, data visualization, SQL, and more.

Coding Practice

Finally, you can post your own questions and discuss topics likely to come up in your interview on Reddit's statistics and machine learning threads. For behavioral interview questions, we recommend learning our step-by-step method for answering behavioral questions. You can then use that method to practice answering the example questions provided in Section 3.3 above. Make sure you have at least one story or example for each of the principles, drawn from a wide range of positions and projects. A great way to practice all of these different types of questions is to interview yourself out loud. This may sound strange, but it will significantly improve the way you communicate your answers during an interview.

Trust us, it works. Practicing by yourself will only take you so far. One of the main challenges of data scientist interviews at Amazon is communicating your different answers in a way that's easy to understand. As a result, we strongly recommend practicing with a peer interviewing you. If possible, a great place to start is to practice with friends.

However, be warned, as you may run into the following problems:
- It's hard to know if the feedback you get is accurate.
- They're unlikely to have insider knowledge of interviews at your target company.
- On peer platforms, people often waste your time by not showing up.

For these reasons, many candidates skip peer mock interviews and go straight to mock interviews with an expert.

Algoexpert

That's an ROI of 100x!

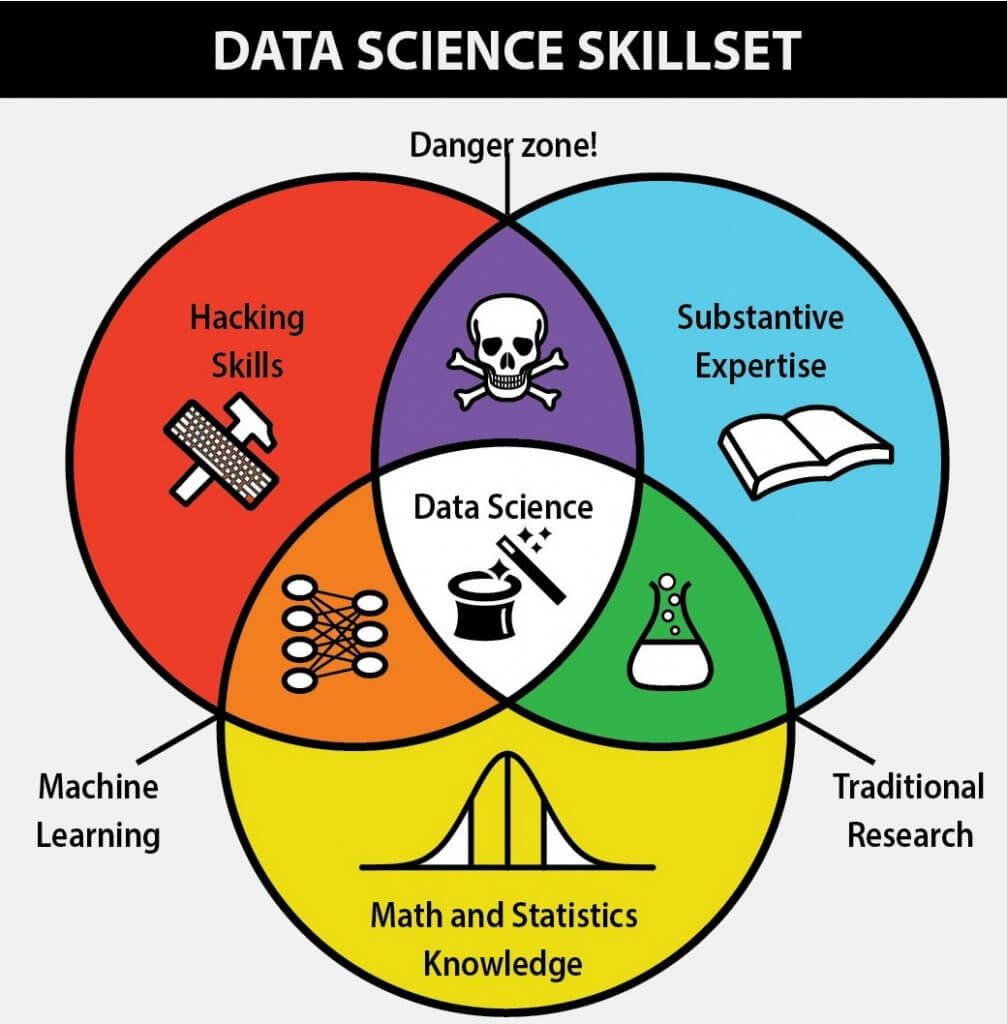

Data Science is quite a large and diverse field. As a result, it is really difficult to be a jack of all trades. Traditionally, Data Science focuses on mathematics, computer science and domain expertise. While I will briefly cover some computer science fundamentals, the bulk of this blog will mainly cover the mathematical basics you may need to brush up on (or even take a whole course on).

While I understand many of you reading this are more math-heavy by nature, realize that the bulk of data science (dare I say 80%+) is collecting, cleaning and processing data into a useful form. As for languages, Python and R are the most popular in the data science space. However, I have also come across C/C++, Java and Scala.


Common Python libraries of choice are matplotlib, numpy, pandas and scikit-learn. It is common to see most data scientists fall into one of two camps: Mathematicians and Database Architects. If you are in the second camp, this blog won't help you much (YOU ARE ALREADY AWESOME!). If you are in the first group (like me), chances are you feel that writing a double nested SQL query is an utter nightmare.
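For readers in the first camp, here is a small, self-contained double nested query, run through Python's built-in `sqlite3` module. The `orders` table, its columns and the data are hypothetical. The query returns customers whose total spend is above the average per-customer total: one subquery builds per-customer totals, and a second, nested one averages those totals.

```python
import sqlite3


def customers_above_average_spend(rows):
    """Return customers whose total spend exceeds the average per-customer total."""
    conn = sqlite3.connect(":memory:")  # throwaway in-memory database
    conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    query = """
        SELECT customer
        FROM (
            SELECT customer, SUM(amount) AS total      -- per-customer totals
            FROM orders
            GROUP BY customer
        ) AS per_customer
        WHERE total > (
            SELECT AVG(total)                          -- average of those totals
            FROM (
                SELECT SUM(amount) AS total
                FROM orders
                GROUP BY customer
            ) AS totals
        )
        ORDER BY customer
    """
    result = [row[0] for row in conn.execute(query)]
    conn.close()
    return result


orders = [("alice", 120.0), ("alice", 80.0), ("bob", 30.0), ("carol", 50.0), ("carol", 60.0)]
print(customers_above_average_spend(orders))  # ['alice']
```

Reading a query like this from the inside out (innermost `GROUP BY` first) is usually the easiest way to reason about the nesting.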

Data collection might involve gathering sensor data, scraping websites or carrying out surveys. After collecting the data, it needs to be transformed into a usable form (e.g. a key-value store in JSON Lines files). Once the data is collected and put in a usable format, it is essential to perform some data quality checks.
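A minimal sketch of that flow, assuming hypothetical sensor readings: the records are serialized as JSON Lines (one JSON object per line) with the standard `json` module, then read back and checked for missing required fields and exact duplicate records. These two checks are illustrative, not an exhaustive quality suite.

```python
import json
from collections import Counter


def to_json_lines(records):
    """Serialize a list of dicts as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, sort_keys=True) for r in records)


def quality_report(jsonl_text, required_fields):
    """Run two basic data quality checks on JSON Lines text."""
    rows = [json.loads(line) for line in jsonl_text.splitlines() if line.strip()]
    # Check 1: count rows where a required field is absent or null.
    missing = Counter()
    for row in rows:
        for field in required_fields:
            if row.get(field) is None:
                missing[field] += 1
    # Check 2: identical records serialize to identical canonical strings.
    canonical = [json.dumps(r, sort_keys=True) for r in rows]
    duplicates = len(canonical) - len(set(canonical))
    return {"rows": len(rows), "missing": dict(missing), "duplicates": duplicates}


# Hypothetical readings: one null temperature and one exact duplicate.
readings = [
    {"sensor_id": "s1", "temp_c": 21.5},
    {"sensor_id": "s2", "temp_c": None},
    {"sensor_id": "s1", "temp_c": 21.5},
]
print(quality_report(to_json_lines(readings), ["sensor_id", "temp_c"]))
```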

Real-world Scenarios For Mock Data Science Interviews

However, in cases of fraud, it is very common to have heavy class imbalance (e.g. only 2% of the dataset is actual fraud). Such information is important for choosing the appropriate approaches to feature engineering, modelling and model evaluation. For more information, check my blog on Fraud Detection Under Extreme Class Imbalance.
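To see why the class balance matters for evaluation, the sketch below uses a hypothetical label list with 2% fraud. A "model" that always predicts the majority class already reaches 98% accuracy while catching none of the fraud, which is one reason plain accuracy is a poor metric in this setting.

```python
from collections import Counter


def class_balance(labels):
    """Fraction of examples in each class."""
    counts = Counter(labels)
    return {label: count / len(labels) for label, count in counts.items()}


def majority_baseline_accuracy(labels):
    """Accuracy of a 'model' that always predicts the most common class."""
    return Counter(labels).most_common(1)[0][1] / len(labels)


def recall(labels, predictions, positive=1):
    """Share of true positives that the predictions actually catch."""
    hits = sum(1 for y, p in zip(labels, predictions) if y == positive and p == positive)
    return hits / labels.count(positive)


labels = [1] * 2 + [0] * 98  # hypothetical: 2% fraud (1), 98% legitimate (0)
always_legit = [0] * len(labels)
print(class_balance(labels))               # {1: 0.02, 0: 0.98}
print(majority_baseline_accuracy(labels))  # 0.98
print(recall(labels, always_legit))        # 0.0
```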

In bivariate analysis, each feature is compared to other features in the dataset. Scatter matrices allow us to find hidden patterns such as:
- features that should be engineered together
- features that may need to be removed to avoid multicollinearity

Multicollinearity is in fact an issue for many models like linear regression and hence needs to be handled accordingly.
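A scatter matrix is a visual check (pandas provides one as `pandas.plotting.scatter_matrix`). A numeric complement, sketched below, is to compute the Pearson correlation matrix with `numpy.corrcoef` and flag feature pairs above a cutoff. The 0.9 threshold and the synthetic housing-style features are assumptions chosen for illustration.

```python
import numpy as np


def highly_correlated_pairs(X, names, threshold=0.9):
    """Return (name_i, name_j, r) for feature pairs with |Pearson r| >= threshold."""
    corr = np.corrcoef(X, rowvar=False)  # columns are features
    pairs = []
    n = corr.shape[0]
    for i in range(n):
        for j in range(i + 1, n):  # upper triangle only, skip the diagonal
            if abs(corr[i, j]) >= threshold:
                pairs.append((names[i], names[j], round(float(corr[i, j]), 3)))
    return pairs


rng = np.random.default_rng(0)
size_sqft = rng.uniform(500, 3000, 200)
size_sqm = size_sqft * 0.0929 + rng.normal(0, 1, 200)  # nearly a rescaled copy
age_years = rng.uniform(0, 50, 200)                      # independent feature
X = np.column_stack([size_sqft, size_sqm, age_years])
names = ["size_sqft", "size_sqm", "age_years"]
print(highly_correlated_pairs(X, names))
```

Here only the two size columns should be flagged; keeping both would give a linear regression two nearly redundant inputs.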

In this section, we will explore some common feature engineering techniques. At times, a feature by itself may not provide useful information. Imagine using internet usage data: you will have YouTube users going as high as gigabytes while Facebook Messenger users use a couple of megabytes.
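One common way to handle a feature that spans several orders of magnitude, assumed here rather than stated above, is a log transform. The sketch uses `numpy.log1p` (log of 1 + x, which stays defined at zero usage) on hypothetical usage values and compares the max/min spread before and after.

```python
import numpy as np

# Hypothetical monthly usage in bytes: a few heavy video users, many light chat users.
usage_bytes = np.array([5e9, 12e9, 3e6, 8e6, 2e6, 20e9])

# log1p compresses the range and maps zero usage to zero.
log_usage = np.log1p(usage_bytes)

ratio_raw = usage_bytes.max() / usage_bytes.min()
ratio_log = log_usage.max() / log_usage.min()
print(f"max/min before: {ratio_raw:.0f}, after log1p: {ratio_log:.2f}")
```

After the transform, heavy and light users differ by a small factor instead of four orders of magnitude, so the feature no longer lets a handful of extreme values dominate.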

Another issue is the use of categorical values. While categorical values are common in the data science world, realize that computers can only understand numbers. For categorical values to make mathematical sense, they need to be transformed into something numeric. For categorical values, it is common to perform One Hot Encoding.
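A minimal from-scratch sketch of One Hot Encoding on a hypothetical color column: each category gets its own 0/1 column, and each row has a single 1 in the column of its category. For DataFrames, `pandas.get_dummies` does the same job.

```python
def one_hot_encode(values):
    """Map each categorical value to a 0/1 vector with one column per category."""
    categories = sorted(set(values))  # fixed, reproducible column order
    index = {cat: i for i, cat in enumerate(categories)}
    encoded = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1  # a single 1 marks this row's category
        encoded.append(row)
    return categories, encoded


cats, rows = one_hot_encode(["red", "green", "blue", "green"])
print(cats)  # ['blue', 'green', 'red']
print(rows)  # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
```

Note that a column with many distinct categories produces many mostly-zero columns, which is where the sparse-dimension concern below comes from.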

Leveraging Algoexpert For Data Science Interviews

At times, having too many sparse dimensions will hamper the performance of the model. An algorithm commonly used for dimensionality reduction is Principal Component Analysis, or PCA.
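A compact sketch of PCA via the singular value decomposition of the centered data, using `numpy.linalg.svd`. The synthetic data (five observed features driven mostly by one hidden factor) is an assumption chosen so that a single component should explain most of the variance. In practice, `sklearn.decomposition.PCA` wraps the same idea.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components using SVD of the centered data."""
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by singular value (largest first).
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_variance = (S ** 2) / (X.shape[0] - 1)
    ratio = explained_variance[:n_components] / explained_variance.sum()
    return X_centered @ components.T, ratio


rng = np.random.default_rng(42)
latent = rng.normal(size=(300, 1))
# Five observed features that are mostly one hidden factor plus small noise.
X = latent @ rng.normal(size=(1, 5)) + 0.05 * rng.normal(size=(300, 5))
Z, ratio = pca(X, n_components=2)
print(Z.shape)  # (300, 2)
print(ratio)    # share of total variance explained by each kept component
```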

The common categories of feature selection methods and their subcategories are explained in this section. Filter methods are generally used as a preprocessing step. The selection of features is independent of any machine learning algorithm. Instead, features are selected on the basis of their scores in various statistical tests for their correlation with the outcome variable.
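As a sketch of a filter method, the snippet scores each feature by its absolute Pearson correlation with the outcome and keeps the top `k`. No model is trained, which is what makes it a filter approach. The synthetic data, where only features 0 and 2 drive the target, and the choice `k=2` are assumptions for illustration.

```python
import numpy as np


def pearson_filter(X, y, k):
    """Rank features by |Pearson r| with the target and keep the top k."""
    scores = np.array([abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])])
    top_k = np.argsort(scores)[::-1][:k]  # indices of the k highest scores
    return sorted(top_k.tolist()), scores


rng = np.random.default_rng(1)
X = rng.normal(size=(500, 4))
y = 3 * X[:, 0] - 2 * X[:, 2] + rng.normal(scale=0.5, size=500)
selected, scores = pearson_filter(X, y, k=2)
print(selected)  # expected [0, 2]: the two features that actually drive y
```

Pearson correlation only captures linear relationships, so a feature with a strong nonlinear effect can still score low under this filter.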

Common methods under this category are Pearson's Correlation, Linear Discriminant Analysis, ANOVA and Chi-Square. In wrapper methods, we use a subset of features and train a model on them. Based on the inferences we draw from the previous model, we decide to add or remove features from the subset.
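A minimal sketch of one wrapper method, greedy forward selection: at each step, fit an ordinary least-squares model with every remaining candidate added and keep the one that reduces the residual sum of squares (RSS) the most. The RSS criterion, the 5% minimum relative improvement used as a stopping rule, and the synthetic data are all assumptions; real workflows usually score candidates on held-out data instead.

```python
import numpy as np


def rss(X, y):
    """Residual sum of squares of an OLS fit with an intercept."""
    A = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ coef
    return float(resid @ resid)


def forward_selection(X, y, max_features, min_gain=0.05):
    """Greedy wrapper method: repeatedly add the feature that most reduces RSS."""
    selected = []
    remaining = list(range(X.shape[1]))
    current = rss(np.empty((len(y), 0)), y)  # intercept-only model
    while remaining and len(selected) < max_features:
        scores = {j: rss(X[:, selected + [j]], y) for j in remaining}
        best = min(scores, key=scores.get)
        # Stop once the relative RSS improvement becomes negligible.
        if (current - scores[best]) / current < min_gain:
            break
        selected.append(best)
        remaining.remove(best)
        current = scores[best]
    return selected


rng = np.random.default_rng(7)
X = rng.normal(size=(300, 6))
y = 2 * X[:, 1] + 1.5 * X[:, 4] + rng.normal(scale=0.5, size=300)
print(forward_selection(X, y, max_features=4))  # expected [1, 4]
```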

Using Pramp For Advanced Data Science Practice

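Common methods under this category are Forward Selection, Backward Elimination and Recursive Feature Elimination. Embedded methods are algorithms with feature selection (or shrinkage) built into training; LASSO and RIDGE are common ones. The regularized objectives are given below for reference, where $\lambda \ge 0$ controls the strength of the penalty:

Lasso: $$\min_{\beta} \; \lVert y - X\beta \rVert_2^2 + \lambda \lVert \beta \rVert_1$$

Ridge: $$\min_{\beta} \; \lVert y - X\beta \rVert_2^2 + \lambda \lVert \beta \rVert_2^2$$

That being said, it is important to understand the mechanics behind LASSO and RIDGE for interviews.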
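To make the mechanics concrete, here is a from-scratch sketch assuming the unscaled objectives $\lVert y - X\beta \rVert_2^2 + \lambda \lVert \beta \rVert_1$ (Lasso) and $\lVert y - X\beta \rVert_2^2 + \lambda \lVert \beta \rVert_2^2$ (Ridge). Ridge has the closed form $\beta = (X^\top X + \lambda I)^{-1} X^\top y$. Lasso has no closed form, so it is solved here by coordinate descent with soft-thresholding at $\lambda/2$. Library implementations such as scikit-learn's may scale the loss differently, so their `alpha` values are not directly comparable. The data and $\lambda$ values are hypothetical.

```python
import numpy as np


def ridge(X, y, lam):
    """Closed-form ridge: minimizes ||y - X b||^2 + lam * ||b||_2^2."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


def soft_threshold(a, t):
    """Shrink a toward zero by t; values within [-t, t] become exactly 0."""
    if a > t:
        return a - t
    if a < -t:
        return a + t
    return 0.0


def lasso(X, y, lam, n_iters=200):
    """Coordinate descent for ||y - X b||^2 + lam * ||b||_1."""
    b = np.zeros(X.shape[1])
    col_sq = (X ** 2).sum(axis=0)
    for _ in range(n_iters):
        for j in range(X.shape[1]):
            # Partial residual: add feature j's current contribution back in.
            r_j = y - X @ b + X[:, j] * b[j]
            b[j] = soft_threshold(X[:, j] @ r_j, lam / 2) / col_sq[j]
    return b


rng = np.random.default_rng(3)
X = rng.normal(size=(200, 5))
true_b = np.array([4.0, 0.0, -3.0, 0.0, 0.0])
y = X @ true_b + rng.normal(scale=0.5, size=200)

ridge_b = ridge(X, y, lam=50.0)
lasso_b = lasso(X, y, lam=200.0)
print("ridge:", ridge_b)  # every coefficient shrunk; irrelevant ones small but not exactly 0
print("lasso:", lasso_b)  # irrelevant coefficients driven to exactly 0
```

The contrast is the usual interview talking point: the L1 penalty can set coefficients exactly to zero (implicit feature selection), while the L2 penalty only shrinks them.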

Supervised Learning is when the labels are available. Unsupervised Learning is when the labels are unavailable. Get it? SUPERVISE the labels! Pun intended. That being said,!!! This mistake alone is enough for the interviewer to end the interview. Similarly, another noob mistake people make is not normalizing the features before running the model.
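A minimal sketch of one common normalization, z-score standardization: subtract each feature's mean and divide by its standard deviation, with both statistics computed on the training data only and then reused for test data to avoid leakage. The income and years-of-experience values are hypothetical. scikit-learn's `StandardScaler` implements the same idea.

```python
import numpy as np


def fit_standardizer(X_train):
    """Learn per-feature mean and standard deviation from training data only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0] = 1.0  # avoid dividing by zero for constant features
    return mean, std


def transform(X, mean, std):
    """Apply the training-set statistics to any split."""
    return (X - mean) / std


# Hypothetical features on very different scales: annual income, years of experience.
X_train = np.array([[50_000.0, 1.0], [82_000.0, 3.0], [61_000.0, 2.0]])
X_test = np.array([[70_000.0, 2.5]])

mean, std = fit_standardizer(X_train)
Z_train = transform(X_train, mean, std)  # each column now has mean ~0 and std ~1
Z_test = transform(X_test, mean, std)
print(Z_train)
print(Z_test)
```

Without this step, the income column would dominate any distance-based or gradient-based model simply because its raw numbers are larger.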

Linear and Logistic Regression are the most basic and commonly used Machine Learning algorithms out there. Before doing any analysis […]. One common interview mistake people make is starting their analysis with a more complex model like a Neural Network. Benchmarks are important.
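As a sketch of starting simple, the snippet fits a plain logistic regression by batch gradient descent on the average log-loss and compares it with a majority-class baseline. The learning rate, iteration count and synthetic data are assumptions, and accuracy is measured on the training data only to keep the example short. Any more complex model would then need to beat both numbers to justify itself.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic_regression(X, y, lr=0.1, n_iters=2000):
    """Batch gradient descent on the average log-loss (no regularization)."""
    Xb = np.column_stack([np.ones(len(y)), X])  # prepend an intercept column
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iters):
        p = sigmoid(Xb @ w)
        grad = Xb.T @ (p - y) / len(y)  # gradient of the mean log-loss
        w -= lr * grad
    return w


def predict(X, w):
    Xb = np.column_stack([np.ones(len(X)), X])
    return (sigmoid(Xb @ w) >= 0.5).astype(int)


rng = np.random.default_rng(0)
X = rng.normal(size=(400, 2))
y = (X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.3, size=400) > 0).astype(int)

w = fit_logistic_regression(X, y)
baseline_acc = max(y.mean(), 1 - y.mean())  # always predict the majority class
model_acc = (predict(X, w) == y).mean()
print(f"majority-class baseline: {baseline_acc:.2f}, logistic regression: {model_acc:.2f}")
```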
